Category: 3.5 Digital Witnesses

Textual Criticism

When I started into this section of the project, I briefly mentioned some concepts related to textual criticism: Transmission, Witnesses, and Collation. In a casual fashion, we have been exploring the transmission of Aladore, how the text is embodied in specific witnesses. Soon we will more carefully collate our digital witnesses in pursuit of a good Reading text. But, now is a good time to step back and reflect about transmission.

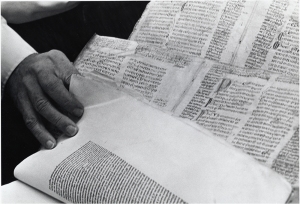

“Close-up of male hand with manuscript in Bridwell Library,”

Southern Methodist University [Flickr Commons],

http://www.flickr.com/photos/smu_cul_digitalcollections/8679147466

Does every letter and every word match in our different versions of Aladore? Editors and typesetters might fix or add errors. A printing press may introduce some anomaly. Our copy might have a stain across a page. Our digital version may be missing something…

Texts are always alive and are never static.

More Textual Criticism

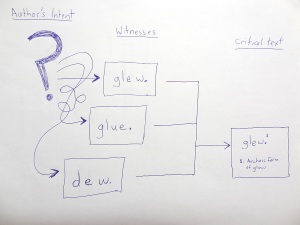

So to illustrate the idea of textual criticism I drew this crummy picture for a short presentation about a year ago:

Somewhere over on the left is a mythical-romantic-ideal world where the true text lives, following the True/Authentic Author’s Intention (or some other silly thing).

But in our real world, ALL we have is a bunch of imperfect manifestations—each a little different. We know that transmission introduces all sorts of errors, alterations, and versions (accidental or intentional).

So we gather up all the extant “witnesses”, try to understand their relationships, and using a bit of art and science reconstruct/craft the best possible text (traditionally defined as “the closest approximation of the original”).

Combine this text with the editor’s “critical apparatus” (the list of manuscripts collated, analysis, notes about variants, etc.) and you get a Critical Edition. (Of course this project is not aiming at a critical edition, so we definitely won’t have the apparatus, or even worry much about the variant readings or “authentic” text, but I want to be thoughtful anyway…).

The words in the drawing are from a real example, Andrew Marvell, To His Coy Mistress (~1650). Wilfred L. Guerin, et al., A Handbook of Critical Approaches to Literature 5th edition (New York: Oxford University Press, 2005) explains that the existing witnesses had four variants for the last word in the second line of this passage:

Now therfore, while the youthful hue

Sits on thy skin like morning [glew; glue; lew; dew].

And while thy willing soul transpires

At every pore with instant fires,

Now let us sport us while we may;

And now, like am’rous birds of prey,

Rather at once our time devour,

Than languish in his slow-chapp’d power.

Let us roll all our strength, and all

Our sweetness, up into one ball;

And tear our pleasures with rough strife

Thorough the iron gates of life.

Thus, though we cannot make our sun

Stand still, yet we will make him run.

Basically, the original manuscript had the word “glew” which later editors couldn’t make sense of. They thought it was an alternate spelling of “glue” and thus a nonsensical mistake. Some editors changed it to “lew” (dialect for warmth) and others to “dew”. Nearly all editions, textbooks, anthologies, and websites still give it as “dew” today (for example, check out the full poem on Poetry Foundation: http://www.poetryfoundation.org/poem/173954).

However, the most recent critical editions restore the text to “glew”, arguing it is actually a dialect word for “glow.”
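To make the idea of collation concrete, here is a minimal Python sketch that lines up four readings of the Marvell line word by word and reports where they disagree. The witness labels A to D are hypothetical placeholders (the example above only gives the four variants, not which witness carries which), and real collation has to cope with far messier cases such as omissions, insertions, and spelling differences.

```python
# Hypothetical witness labels; only the four variant words come from the example above.
WITNESSES = {
    "A": "Sits on thy skin like morning glew",
    "B": "Sits on thy skin like morning glue",
    "C": "Sits on thy skin like morning lew",
    "D": "Sits on thy skin like morning dew",
}


def collate(witnesses):
    """Return {word position: {witness label: word}} where the readings differ.

    Assumes every witness has the same number of words, which holds for this
    toy example but not for real texts.
    """
    tokenized = {label: text.split() for label, text in witnesses.items()}
    lengths = {len(words) for words in tokenized.values()}
    if len(lengths) != 1:
        raise ValueError("this simple sketch needs witnesses of equal length")

    variants = {}
    for position in range(lengths.pop()):
        readings = {label: words[position] for label, words in tokenized.items()}
        if len(set(readings.values())) > 1:
            variants[position] = readings
    return variants


if __name__ == "__main__":
    for position, readings in collate(WITNESSES).items():
        print(f"word {position + 1}:", readings)
```

The output is the raw material for an editor's decision: the sketch finds the disagreement, but choosing between “glew” and “dew” is still the art part.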

But why are Critical Editions so important to scholars?

- they provide the raw materials for study.

- they create an authoritative common text that can be referenced.

- prestige, they can personally make their mark on an author.

Once you have one critical edition why would you ever need another??

- new evidence is discovered.

- new interpretations of the evidence.

- there are always copy-editing errors, and text reproductions continually degrade.

- editorial techniques, styles, and conventions change over time–this is an art!

Critical understanding of the transmission of a text illuminates the life of the work.

Editing [scholarly or otherwise] is part of keeping a text alive.

Digital Text

But now we (and our texts) live in the Digital world. What does that mean for transmission?

I think texts are getting crazier… Texts on paper, even hand copied, are fairly static and stable over centuries. How we interpret them changes a lot, but the witnesses are pretty solid.

Digital texts, on the other hand, are so easy to change, copy, distribute, re-distribute, etc., etc. The internet is a textual Wild West where everyone copies back and forth from each other, and text is constantly transforming. It is almost impossible to trace its life as it tumbles through the digital world.

The last few decades have seen a chaotic proliferation of free online books, often of highly suspect quality and consistency, almost always with poor metadata. Projects such as the Universal Library/Million Book Project rushed to create scanned copies of, well, millions of books–most of which are terrible quality. Pages are missing, images are out of focus or capture a hand, files got jumbled (you can see some of the wreckage on Internet Archive: https://archive.org/details/millionbooks)… These digitized images of books are supposed to be a type of facsimile edition–attempting to exactly represent the qualities of the original book. However, simple images of pages do not take advantage of the digital medium.

To enable search functions, OCR is used to create transcriptions of the images–essentially creating a new version of the text, a low accuracy machine generated/interpreted version… Since the OCR is machine readable, it is used to generate all sorts of other formats such as epubs or txt. Few of these receive any human editing.

However, as early as the 1970s, Project Gutenberg was creating ebook editions of public domain works specifically focused on general Reading. They are not attempting to create authoritative or critical editions. Nor are they trying to exactly reproduce a specific print copy of the work. Instead, the texts are proofread and edited by real people, to generate good Human readable ebooks. Anyone (i.e. YOU) can volunteer to become a proofreader. Check out their Distributed Proofreaders: http://www.pgdp.net

This project follows on that type of tradition.

However, there are a lot of other directions you can go with digital texts. There is growing demand for academic quality texts to use as raw material for digital analytic techniques. Standards such as Text Encoding Initiative (TEI) offer possibilities of enriching text with semantic markup.
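As a rough illustration of what semantic markup can look like, the sketch below uses Python's standard library to build a tiny TEI-style apparatus entry for the Marvell line discussed earlier. The `app`, `lem`, and `rdg` elements and the `wit` attribute belong to TEI's critical apparatus vocabulary, but the witness identifiers (#A to #D) are made up for the example, and a real TEI file would also need a header, a namespace, and a list of witnesses.

```python
import xml.etree.ElementTree as ET


def build_line(before, lemma, variants, after=""):
    """Build a TEI-style <l> element containing one <app> entry.

    `lemma` is a (witness id, reading) pair for the preferred reading;
    `variants` is a list of such pairs for the other readings.
    """
    line = ET.Element("l")
    line.text = before
    app = ET.SubElement(line, "app")
    lem = ET.SubElement(app, "lem", {"wit": lemma[0]})
    lem.text = lemma[1]
    for witness, reading in variants:
        rdg = ET.SubElement(app, "rdg", {"wit": witness})
        rdg.text = reading
    app.tail = after  # text that follows the apparatus entry within the line
    return line


if __name__ == "__main__":
    # Witness ids are hypothetical placeholders, not real sigla.
    line = build_line(
        "Sits on thy skin like morning ",
        ("#A", "glew"),
        [("#B", "glue"), ("#C", "lew"), ("#D", "dew")],
        after=".",
    )
    print(ET.tostring(line, encoding="unicode"))
```

Markup like this keeps every reading in the file, so a reader application can decide later which one to display.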

You can also do a lot of neat stuff with online readers to create digital editions that collate and display several variant texts together, or a critical text with the variant readings as hyperlinks. For example, check out the “Online Critical Pseudepigrapha”: http://ocp.tyndale.ca

A digital text can represent the extremely complicated nature of transmission, supporting multiple readings of the text (set in a historical context) rather than a single critical edition. For example, check out the “Homer Multitext Project”: http://www.homermultitext.org/about.html

Anyway, forget about all that–I will get back to Aladore soon…

Working with PDF?

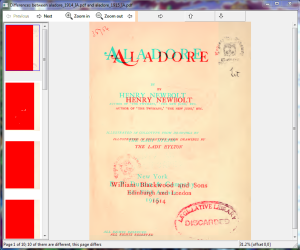

As I outlined earlier, Aladore was only scanned twice (a 1914 and a 1915 edition), but there are many different access derivatives available online with mysteriously different qualities.

Internet Archive, Hathi, and Scholars Portal Books each provide a “read online” feature, but if you want to download your own copy, it will be a PDF of course.

PDFs are kind of strange files. Standardized and portable, yet so much variation in how they behave depending on the elements hidden inside.

Here is a comparison of the available PDFs to get a sense of the range:

Aladore 1914:

- Internet Archive, 418 pages, 10.92 MB, produced by LuraDocument, OCR

- Scholars Portal Books, 418 pages, 57.29 MB, produced by LuraDocument, OCR

LuraDocument is compression and OCR software used for processing individual page images into a PDF. These two files were made from the same page images, except that IA has done a ton more compression on their PDF. Because of it, the IA PDF is very slow to turn pages (render) and is very ugly. It is terrible to read even on a laptop, much less an ereader. Making smaller file sizes was a bigger concern in the early 2000s–nowadays, IA should be putting up better quality.

Aladore 1915:

- Internet Archive, 416 pages, 13.57 MB, produced by LuraDocument, OCR

- Hathi Trust, 417 pages, 122 MB, produced by image server / pdf::api3, no OCR

Again, the IA version is mangled by compression. They don’t always do this! For example, check out the Blackwood’s Magazine, Vol. 195 (January to June 1914) where Aladore was first published. I already mentioned how much I dislike the Hathi version, due to the way they embedded the page image in a standard 8×10 background and added a watermark. The “image server / pdf::api3” is a Perl module for creating PDFs. It is doing the equivalent of “print to PDF” on the fly from the content server’s jpeg images.

You might notice that the page numbers don’t quite line up. The Hathi version has one extra page because they add a digital object title page to the front of the book. However, that still means that the 1914 scan has 418 pages, and the 1915 scan has 416. When scanning books, skipping a pair of pages is the most likely mistake–so our 1915 scan may have skipped two pages… or the printing omitted two pages somewhere? or they were torn out by some angry reader in 1923?? The front and back matter look identical, so I will have to take a closer look.
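A quick back-of-the-envelope calculation makes the compression difference easier to see. The sketch below takes the page counts and file sizes from the comparison above and works out the average size per page, assuming 1 MB = 1024 KB (the download pages do not say which convention they use, so treat the results as approximate).

```python
# (label, pages, size in MB) as listed in the comparison above.
PDFS = [
    ("1914 Internet Archive", 418, 10.92),
    ("1914 Scholars Portal Books", 418, 57.29),
    ("1915 Internet Archive", 416, 13.57),
    ("1915 Hathi Trust", 417, 122.0),
]


def kb_per_page(pages, size_mb):
    """Average size of one page in KB, assuming 1 MB = 1024 KB."""
    return size_mb * 1024 / pages


if __name__ == "__main__":
    for label, pages, size_mb in PDFS:
        print(f"{label}: about {kb_per_page(pages, size_mb):.0f} KB per page")
```

On these figures the Scholars Portal Books file spends roughly five times as much space on each page as the Internet Archive file of the same 1914 scan.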

If you really want to learn about the PDF format, here are three resources:

IDR Solutions blog has a large series of articles about the technical and practical aspects of PDFs, “Understanding the PDF File Format: Overview,” https://blog.idrsolutions.com/2013/01/understanding-the-pdf-file-format-overview/#pdf-images

iText RUPS (Reading and Updating PDF Syntax) is a Java program that allows you to browse the internal file structure of a PDF–it’s a maze, but very interesting. It can be helpful for “de-bugging” PDF files, but the editing features are not functional yet. http://sourceforge.net/projects/itextrups

Once you peek inside the secretive contents of the PDF, just flip through your own copy of the handy Adobe PDF Reference to understand what you are seeing. It’s only 756 pages long! http://wwwimages.adobe.com/content/dam/Adobe/en/devnet/pdf/pdfs/PDF32000_2008.pdf

The PDF standard was first developed by Adobe, but is now maintained by ISO, so you can buy the official copy of ISO 32000-1:2008 for a mere 198 Swiss francs. Or get the complete documentation for free from Adobe, http://www.adobe.com/devnet/pdf/pdf_reference.html

Not that I would ever check out this junk!
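If you would rather start with a quick peek than with 756 pages, here is a minimal sketch using only Python's standard library. It reads the version from the `%PDF-` header at the start of the file and makes a crude search for a `/Producer` entry, the field where a name like LuraDocument shows up. This is a heuristic and not a real parser: it assumes the entry is stored as a plain, uncompressed literal string, which many PDFs do not do.

```python
import re


def peek_pdf(path):
    """Return (version, producer) for a PDF, using crude byte searches.

    `producer` is None when no plain-text /Producer entry is found, for
    example when the metadata is compressed, encrypted, or hex-encoded.
    """
    with open(path, "rb") as handle:
        data = handle.read()

    # A PDF normally begins with a header such as %PDF-1.4
    header = re.match(rb"%PDF-(\d\.\d)", data)
    version = header.group(1).decode("ascii") if header else None

    # Literal strings sit in parentheses; this ignores escaped or nested ones.
    found = re.search(rb"/Producer\s*\(([^)]*)\)", data)
    producer = found.group(1).decode("latin-1") if found else None
    return version, producer
```

Calling `peek_pdf` on a downloaded file returns a pair such as a version string and a producer name, or `None` for whichever part the simple search could not find.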

Anyway, after looking at these PDFs I realized it would be much better to NOT work with them… and I figured out a workaround! More on that soon.

Digitization

Before we leave the “Witnesses” section of the project, I think we should take a look at digitization. We are dealing with digital images of old print books, so the process of creating them has a major impact on the transmission of our witnesses.

A typical book digitization workflow goes something like this:

- Get a book (some intelligent administrative system for deciding what to digitize, hopefully? If you skim through what has actually been digitized around the world, you will realize it is unfortunately not often guided by a very careful plan. Much digitization is still purely ad hoc, off the side of the desk…)

- Scan the book to create digital images

- Process/edit the digital images

- Prepare access/display images (and hopefully archival master images for storage)

Here is a bit more detail on the process. For scanning books there are basically two options:

- Non-destructive: use a book scanner (such as ATIZ BookDrive). These specialized scanners have a cradle that holds the book open in a natural angled position (rather than flat). They usually have two cameras on a frame so that one is pointed at each page of the opened book. To scan, you turn the page and pull down a glass platen to hold the book in place. The cameras quickly take a shot of each page. Lift the platen, turn the page… etc. etc. etc. It takes a lot of tedious human work, but the results are good (assuming the operator keeps their fingers out of the image, check out the Artful Accidents of Google Books for some problem examples). Usually this is done by digitization labs at big universities or organizations such as Internet Archive. However, there is also a vibrant DIY community. Check out DIY Book Scanner to get started: http://www.diybookscanner.org

- Destructive: If the particular copy of the book you have is no longer valuable, it can be disbound to make scanning faster and easier. A specialized device literally cuts the binding off the book. With the pages loose, whole stacks can be automatically fed into a document scanner. Feed it into something like these Fujitsu Production Scanners and the whole book will be scanned in a couple of minutes. Here is a blog post from University of British Columbia about destructive scanning: http://digitize.library.ubc.ca/digitizers-blog/the-discoverer-and-other-book-destruction-techniques

Scanning a book results in a huge group of image files. If you are using a camera based book scanner these are usually in a RAW format from the camera’s manufacturer. Other types of scanners will usually save the page images as TIFFs. These unedited scan images usually need to be cropped to size and enhanced. The readability of the image can usually be improved by adjusting the levels and running unsharp mask. The edited files will usually be saved as TIFFs, since it is the most accepted archival format.

Now that you have all these beautiful, readable TIFFs, you need to make them available to users, that is, create access copies and provide metadata. The edited TIFFs are usually converted into a smaller sized display version for serving to the public online. For example, the book viewers on Hathi and Internet Archive use JPEGs. You can check this by right clicking on an individual page and viewing or saving the image (the viewer on Scholars Portal Books also uses JPEGs, but has embedded them in a more complex page structure, so you can’t just right click on the image). This step is often automated by a digital asset management system. Other sites only provide a download as a PDF. PDF versions are usually constructed using Adobe Acrobat or ABBYY FineReader (many scanning software suites also have this functionality), combining the individual page images into a single file. PDF creation usually compresses the images to create a much smaller file size. OCR is completed during this processing as well.

The Aladore PDFs from Internet Archive and Scholars Portal Books (U of Toronto) were created using LuraDocument. This is a system similar to ABBYY FineReader, which takes the group of individual page images, runs OCR, compresses the images, and creates a PDF. OCR used this way allows the text to be associated with its actual location on the page image, thus making the PDF searchable. (For my project, I don’t want to create a PDF and I don’t care about the location of the text on the page–I just want the text, so my workflow will be a little different.)

If you compare the two PDFs, as I have ranted a bunch of times now, you can see that IA’s version is horrible! The pages load very slowly and the images look bad. Nonetheless, they started with exactly the same images as the U of T version. They just used much higher compression. This creates a smaller file size (IA 11 MB vs. UoT 57 MB), but the image quality is much worse and rendering time is increased.

So, I guess the point is: like the ancient world of scribes hand copying scrolls, textual transmission is still an active human process. It is full of human decisions and human mistakes. The text is not an abstract object, but something that passes through our hands, through the hands of a bunch of scanner operators anyway…

3.5 and digital skew

“And as he heard it Ywain forgot all the ills that he had suffered in all his life, and he thought on such a place as might be the land of his desire” (p.142)

I suddenly felt like category #3 was getting too big… so I broke it into two sections, “3. Witnesses” and “3.5 Digital Witnesses.” Sorry for any confusion this could cause, but I am guessing it will be OKAY.

I am almost done gathering all the files needed for the next stage! Aren’t you dying to learn all about it?

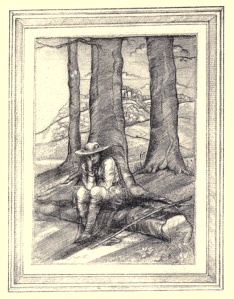

The image above shows some issues of interpretation of the digital witness. It’s clearly not square. Is this an original feature of the print? Or is it distortion introduced by photography or post processing? Or both? I can correct it with some editing, but then I am falsely representing the digital witness at the least…

I am always tempted to crop off the UGLY false frame printed around the illustrations. But that wouldn’t be presenting the authentic context? Oh Alice, the illustrations are not great, and they aren’t helped by the poor print layout either…

Witnesses Ready

The aim of the next stage of the project, Capturing Text as I decided to call it, is to test some OCR platforms to create a usable digital text from the digitized page images of Aladore.

I have been talking a lot about the witnesses available online, and I was frustrated with the PDF versions. PDF is just not a very good format to work with and the quality was too variable. Although OCR is possible on PDF files, most of the programs are really set up to use individual page images. To get the best possible digital text, I need the best quality images I can get.

No one provides individual page images per se… but actually they do! Most online reader platforms are actually a container for serving up JPG pages. For example, check out the Internet Archive reader display of Aladore 1915, https://archive.org/stream/aladorehen00newbrich#page/n7/mode/2up

Right click on one of the pages and choose “view image”. You find a JPG! You can get better quality files by zooming in first then viewing the image. If you study the resulting URL you will be able to figure out the pattern for naming the individual page images and qualities.

I use the Free and open download manager DownThemAll! which is an extension for Firefox: http://www.downthemall.net

This allowed me to efficiently download a full set of images for the 1914 (from Internet Archive) and 1915 (from Hathi Trust) editions. While this is not the way IA and Hathi intended us to use the images stuck in the online readers, if we flipped through every page of the book it would result in exactly the same file use.
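Once you have worked out the pattern, building the list of page URLs to hand to a download manager is simple. The sketch below is only an illustration: the URL template, the `scale` parameter, and the book identifier are invented placeholders, not the real addresses used by Internet Archive or Hathi Trust, so substitute whatever pattern you find by inspecting the image URLs yourself.

```python
# Invented template for illustration; real readers use their own URL schemes.
TEMPLATE = "https://example.org/reader/{book}/page-{page:04d}.jpg?scale={scale}"


def page_urls(book, first_page, last_page, scale=1):
    """Return one image URL per page, from first_page to last_page inclusive."""
    return [
        TEMPLATE.format(book=book, page=page, scale=scale)
        for page in range(first_page, last_page + 1)
    ]


if __name__ == "__main__":
    # "some-book-id" is a placeholder identifier.
    urls = page_urls("some-book-id", 1, 418)
    print(len(urls), "URLs, starting with", urls[0])
```

Generating the list up front also makes it easy to spot a gap later, since every expected page has a predictable file name.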

So, Awesome! Digital witnesses ready to go!

Public Domain

Let’s take a moment to reflect about public domain and openness…

We can use the text of Aladore because it is in the public domain.

We need to thank the digitizers for creating the digital surrogate and the various hosts for serving us up all the files.

Imagine how much entertainment and creativity this simple action supports!

However, it is worth questioning the format the text is offered in. Digitized files are too often held possessively, locked in unusable formats to prevent fully free access. For example, compare the online reader at Scholars Portal Books (http://books1.scholarsportal.info/viewdoc.html?id=75462#tabview=tab1) with the Internet Archive reader. The procedure to download JPGs mentioned in the last post is impossible with this reader because of the way the images are embedded. Despite the fact that it relies on exactly the same type of content server, the JPGs have been carefully hidden by the JavaScript. Hathi and Scholars Portal Books provide access only via the online reader or PDF download. In contrast, Internet Archive offers multiple formats for download. We have seen that these formats are low quality due to the poor OCR and lack of editing–but at least they attempt to make the text more digitally open.

I think this type of openness is important moving forward with digitized materials–freeing the text from the printed page to take full advantage of the digital medium. In software there is often a distinction made between Gratis (free of charge) software and Libre software. Libre software is Free in terms of users’ rights (liberty), not only the monetary cost (see: http://www.fsf.org/about/what-is-free-software). Public domain books in image based PDFs are free, but not really Libre, because the format is so limiting. If the text is provided in a more open format, such as XML, it opens new worlds of analysis where the text can be broken free of the page–aggregated, viewed, remixed, or experienced in totally new ways.

We should also think about copyright for a minute: if you check out this chart outlining copyright in the USA created by Cornell, https://copyright.cornell.edu/resources/publicdomain.cfm you will notice that just a decade after Aladore was published, copyright becomes a lot less clear–a ridiculous legal mess and a more restrictive future. The hoarding of intellectual property is now a legal reality and big business model. Canada’s copyright law is slightly simpler; for example, skim this guide from University of British Columbia, http://copyright.ubc.ca/guidelines-and-resources/support-guides/public-domain. It is still an absolute maze when it comes to films, orphan works, or books that contain other public domain text or artwork.

I think it is unfortunate that our culture continues to strengthen the powers of copyright. At first glance copyright seems like a provision designed to protect authors. However, in the current model the scales have tipped to allow traditional hoarders of capital to hoard even more capital (intellectual capital). It’s not about authors or creativity. And it does not acknowledge the collective, cumulative, and interactive nature of creation. Nothing is an original. Yet, companies like Disney are starting to make copyright claims about ancient cultural stories such as the Little Mermaid…

Here is an amusing video if you would rather watch something funny about copyright, “A Fair(y) Use Tale”: http://youtu.be/YLGNVIF0AYU

Or maybe this interesting documentary “Everything is a Remix”: http://youtu.be/d9ryPC8bxqE

If you want to see some great articles that highlight digitized resources in the public domain, check out Public Domain Review. They do a good job of finding fascinating things and providing some context to understand the objects: http://publicdomainreview.org

Scanning Error Discovery!

I mentioned earlier that the scanned version of Aladore 1914 had 418 pages while the 1915 edition had 416 pages. There weren’t any obvious differences between the front and back matter, so it meant somewhere in there the 1915 scan was missing two pages… scary!

To try to discover the difference, it would be easiest to have the two PDFs side-by-side or overlaid to visually compare. A side-by-side view is a feature of many paid full version PDF tools, such as Adobe Acrobat or Nitro Pro. This still requires turning pages on each PDF separately which can get annoying if the problem is a few hundred pages in! I am not aware of any free PDF reader that has this view. A paid option (with a free trial) that specializes in this task is DiffPDF. However, as usual, I want to steer towards as open as possible instead.

An open source alternative is (the quite similarly named) diff-pdf, http://vslavik.github.io/diff-pdf. It is not very polished, but is pretty neat once you figure it out. Basically, download the package and unzip. The program is run from the command line. To make things simple, I put the two Aladore PDFs I wanted to compare into the diff-pdf directory. I then ran the command:

[file path]\diff-pdf.exe --view aladore_1914_IA.pdf aladore_1915_IA.pdf

This analyses the listed files and starts up a GUI visualization of the two PDFs overlaid. It looks like this:

Luckily, the discrepancy between the two scanned editions was easy to find–it was immediately obvious in diff-pdf when the editions got out of sync. It turns out the 1915 scan is missing pages viii and ix, in the table of contents. This is a typical scanning error: basically the pages probably stuck together, and the operator didn’t notice when turning the page. Luckily it was in the table of contents and not the text itself.
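The same kind of check can be sketched without a GUI if you have a label or text snippet for each page. The example below is not how diff-pdf works internally; it is a small illustration using Python's standard `difflib` module, and the page labels are made-up stand-ins for whatever per-page fingerprint you can extract (printed page numbers, the first words of the OCR text, and so on).

```python
from difflib import SequenceMatcher


def missing_pages(complete, suspect):
    """Return the items present in `complete` but skipped in `suspect`.

    Only pure omissions are reported; stretches where the two sequences
    simply differ are ignored in this sketch.
    """
    matcher = SequenceMatcher(a=complete, b=suspect, autojunk=False)
    missing = []
    for tag, a_start, a_end, _b_start, _b_end in matcher.get_opcodes():
        if tag == "delete":
            missing.extend(complete[a_start:a_end])
    return missing


if __name__ == "__main__":
    # Made-up page labels standing in for two scans of the same front matter.
    scan_1914 = ["v", "vi", "vii", "viii", "ix", "x", "1", "2", "3"]
    scan_1915 = ["v", "vi", "vii", "x", "1", "2", "3"]
    print(missing_pages(scan_1914, scan_1915))
```

Aligning the sequences rather than comparing page by page matters here, because after a skipped pair every later page is offset by two and a naive comparison would flag all of them.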

Nice to have that mystery solved!

PDF Reading

I messed around a lot with PDFs during this project, but then decided to avoid using them during processing. However, I thought I should write a post about PDFs since we all use them every day.

Many people don’t realize there are alternatives to Adobe Reader. Issues with Adobe data breaches or privacy invasions of the new Adobe Digital Editions 4.0 might make you consider switching to something else… PDFreaders.org provides a list of Free Software PDF readers so you can replace the slightly creepy, proprietary Adobe with something that respects your freedom.

In the past I used Foxit Reader (freeware). I really liked the interface and features, but Foxit is also proprietary and has some issues with privacy and unwanted software bundled in installs and updates. I was bothered by an update that suddenly opted me into their cloud service with no explanation. They have recently contributed to the open source community, as code for the Foxit rendering engine is the basis for Google’s PDFium project. Nonetheless, I quit Foxit…

On Windows, I now use Sumatra PDF as my everyday reader (which is not listed at PDFreaders.org, but is open source). You can download the application from the main site: http://blog.kowalczyk.info/software/sumatrapdf/free-pdf-reader.html, or look at the code (GPLv3) here: https://code.google.com/p/sumatrapdf.

Sumatra is very simple and stripped down. Unlike Adobe Reader, it starts up very quickly and doesn’t run any background helpers, definitely no bloat. It is also more flexible: it can read PDF, ePub, Mobi, XPS, DjVu, CHM, CBZ, and CBR files, so it is a great all around reader. On rare occasions the PDF rendering is slower than Adobe or Foxit, usually when the page images are highly compressed in the PDF container. Sumatra provides a message saying “page is rendering”, which is an improvement over Adobe where you can scroll through a complex document and find mysteriously empty pages that may later appear. Similarly, Sumatra never mysteriously freezes while sorting complex rendering out–it just honestly tells you it is working on it! I appreciate full user feedback!

On Linux, almost everyone already has some open source reader. I use Evince as a basic all-around reader, https://wiki.gnome.org/Apps/Evince. If you like it, Evince is easy to install on Windows as well, but it honestly runs better on Linux.

Here are some other handy utilities I found useful for manipulating PDFs while working on Digital Aladore:

- Did you know you can open any PDF with LibreOffice Draw? This enables you to annotate and edit the PDF and save it in different formats. VERY HANDY!

- PDF Shaper (freeware from GloryLogic) is an easy to use set of PDF manipulating tools. It offers features such as splitting, combining, extracting images, and conversions. Slick and smooth interface.

- K2pdfopt is an open source tool focused on reformatting PDFs for ereaders or mobile devices. Very useful for converting multi-column texts into simpler PDFs.

- jPDF Tweak is a GNU open source project that is the self proclaimed “Swiss Army Knife for PDF files.” The learning curve is steep, but it can do an amazing amount of Tweaks!

What applications do you use for PDF Reading, editing, and creation?